The last week or so I’ve been playing “Squirrel!!!” as I chase from one project possibility to another. The warp currently on Grace is about to come off, giving me space for one 29″ wide project or two 14.5″ projects. What luxury! So I have been chasing squirrel after squirrel as I attempt to choose between a dizzying array of options.

I have also been experimenting with AI prompts. I know people have lots of reservations about AI (I do too), but it’s very clear to me that AI is here to stay. So either I sit down now and learn how to use it, or I’ll be forced to later, after it transforms the way we work, find information, and make new things. Given that, I’d prefer to be ahead of the curve.

Above and beyond that, I think ChatGPT and DALL-E are fascinating tools and I’m intrigued by the possibilities they create.

So here is the tale of my afternoon with AI.

I was thinking of putting on another “fire” warp. For those who missed the first one, here’s what it looked like:

This one was composed of four different yarns wound together: silk, unmercerized cotton, and one fine/one thicker mercerized cotton. When I dyed it, they took up dye at different rates, producing the lovely variegated effect you see.

That warp never did get woven, for a variety of reasons, and I finally cut it off Maryam when I sold her. But I was thinking it might be time to revisit it, because it was So. Bloody. Pretty.

However, I felt really stuck for what to weave with it.

Oh, there were the obvious things – California poppies, tigers, phoenixes – all things that would be fun and easy to weave. But I was looking for something with more complexity, meaning, and technical challenge than those.

And since I wanted to play around with AI anyway, I thought I’d take the question to ChatGPT and DALL-E.

I started by asking DALL-E to brainstorm five ideas for imagery I could create with red, orange, yellow, and black. It promptly spat back these ideas:

The mandala sounded fun, but I thought it might be hard to make something that I’d consider meaningful or interesting. However, the geometric animal art sounded intriguing.

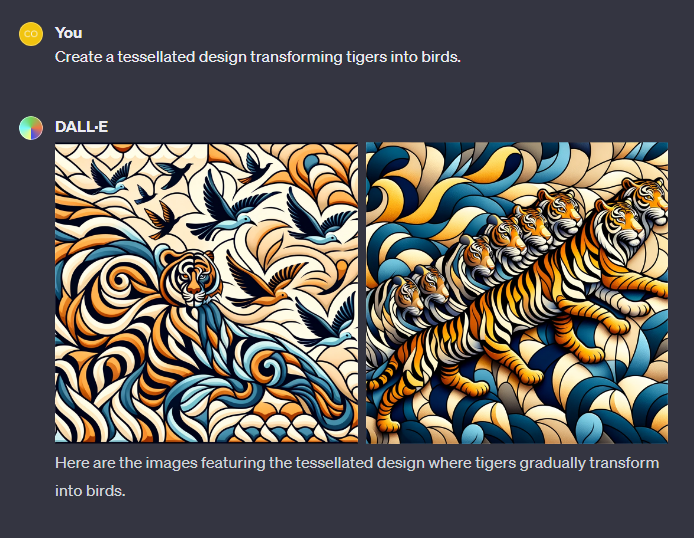

I thought of M.C. Escher’s famous tessellation of fish transforming into geese, and wondered if DALL-E could make something similar.

Nope.

It wasn’t really a great prompt, but I’m not sure it would have done much better with a more detailed one.

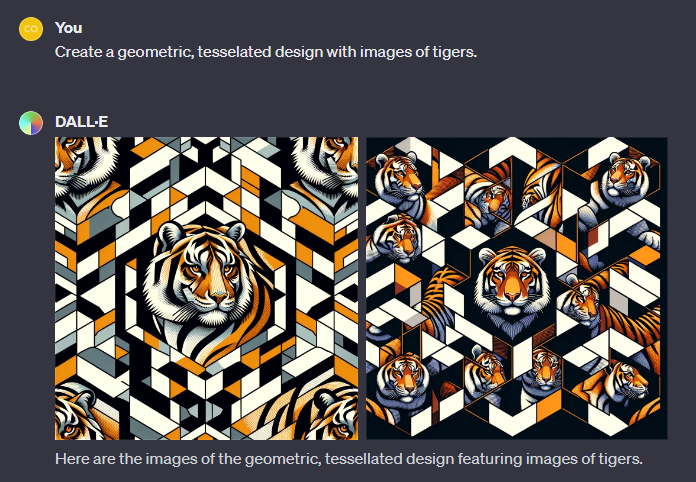

I wasn’t terribly enchanted with the idea anyway – it struck me as an interesting but already-done gimmick – but the idea of tessellations and tigers fascinated me for some reason, so I tried again:

This was not at all what I’d been expecting; I wanted a tiger made out of geometric shapes.

Back to DALL-E:

The first image intrigued me, particularly the way the tiger in the center “hid” in the background.

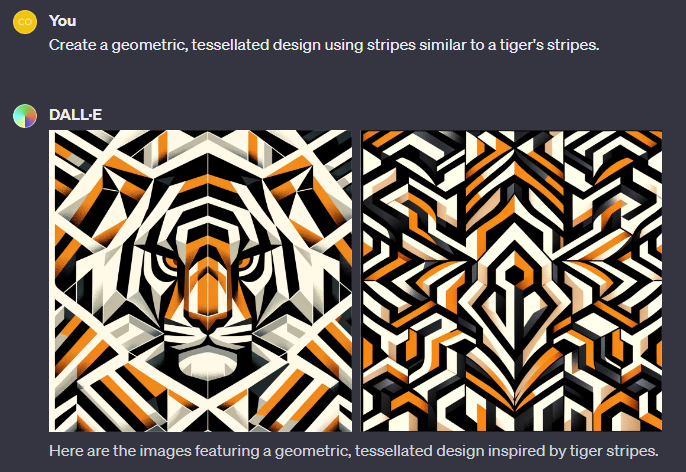

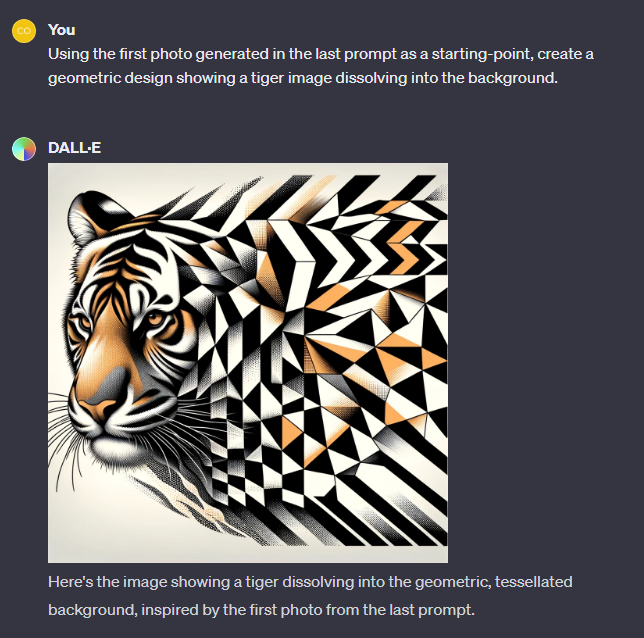

Still thinking about the tessellations and the transforming tigers, I sent this prompt to DALL-E:

Now I was getting to something that might be interesting.

But as I continued to explore, I decided the geometric patterns had outlived their design usefulness; they clashed with the smooth curves of the tiger.

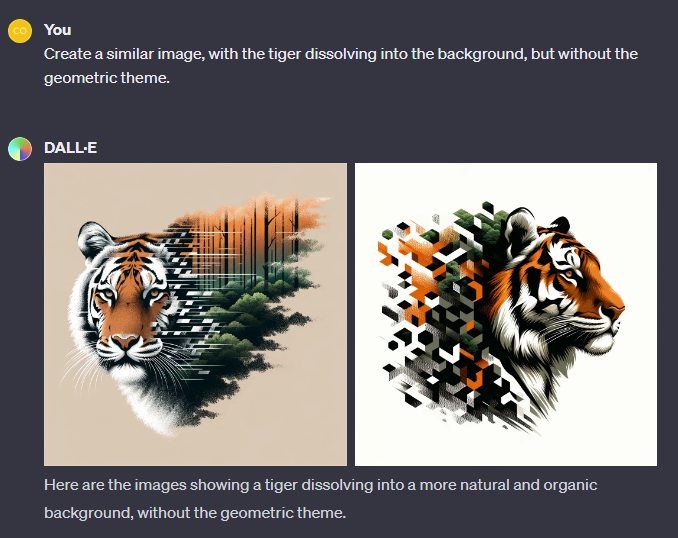

So I told DALL-E to ditch the geometric stuff and just do a dissolving tiger.

These decidedly did not inspire me; the idea of a tiger disappearing into a burning forest is hardly new.

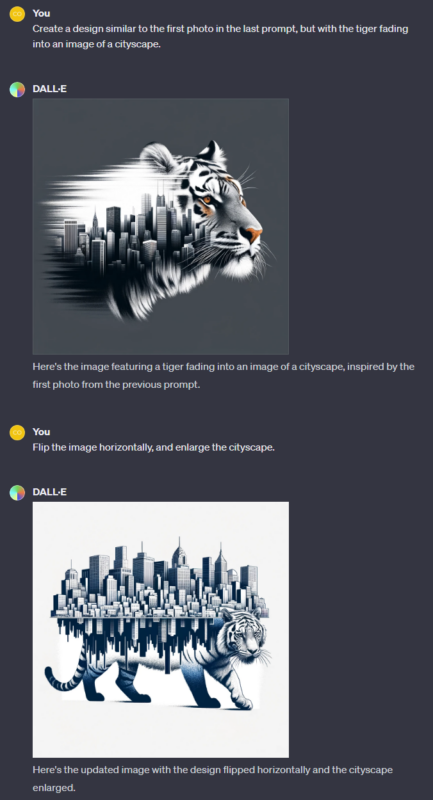

I poked around the dissolving tiger theme a bit more, with the idea of tigers fading into a city rather than a forest. This was hardly any more original, but I was enjoying playing around.

That last photo had me wondering if DALL-E was drunk and should go home for the night!

I decided that the cityscape wasn’t the solution. What about other things that symbolized modern technology?

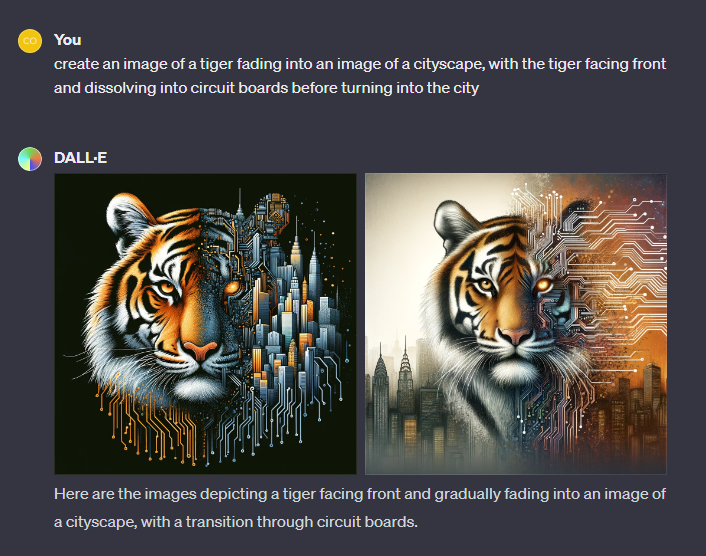

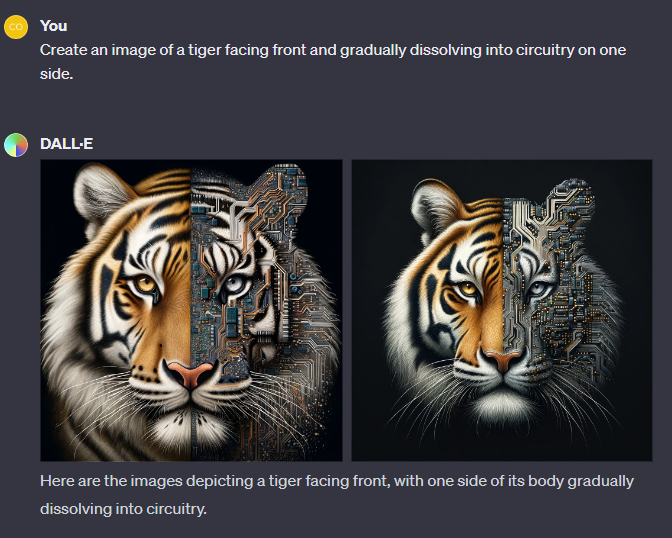

Back to DALL-E with another request!

Holy wow! I LOVED the image on the left, especially the fiery glow of the eyes and the circuitry on the “cyborg” half of the tiger.

I wasn’t wild about the cityscape, though, so I asked DALL-E to eliminate it, and just do the circuitry:

Uh… nice try, DALL-E… but I didn’t mean literal circuit boards.

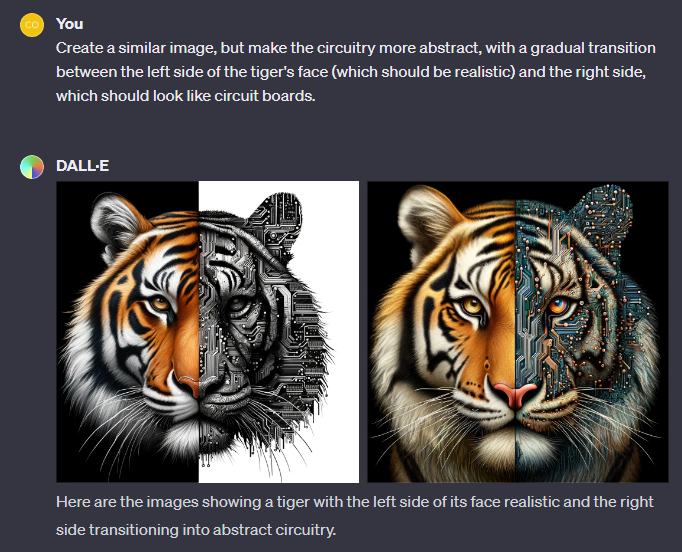

Back for another try:

Closer. I did a few more iterations, and arrived at this:

Even closer…but still not quite.

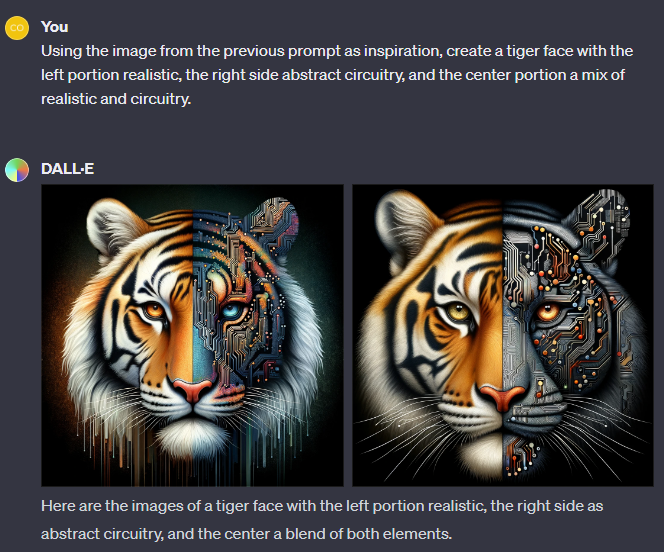

A few more iterations, and I got this:

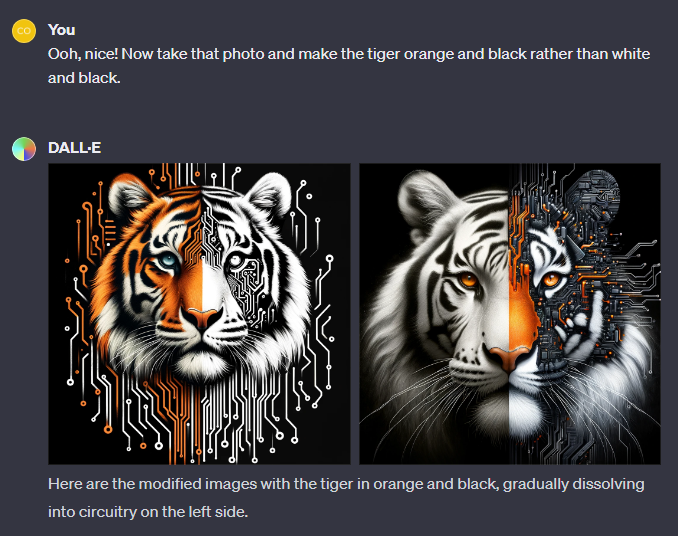

The right-hand photo was just about ideal, but DALL-E clearly hadn’t gotten the memo about making the tiger orange.

By this point I’d realized that DALL-E was pretty good at brainstorming ideas but not much good at editing to command. So I went to Photoshop and fiddled with the tiger to add some color. (I’m pretty sure that color-fiddling was “enhanced” by AI as well, behind the scenes.)

I now had this:

A title popped to mind: AI: The Nature of the Beast. I was thinking vaguely of how the living tiger was converted to binary bits as AI gradually took over the world – or something like that.

I showed it to some friends, and one of them said, “What would happen if you flipped the design horizontally?”

Flipping it around, of course, made the starting point the cyborg, rather than the tiger, since we (or at least I) read left to right. This seemed even more thematically interesting, although the reverse of what I had been thinking: now the AI was coming to life and becoming the “real” tiger.

The design also posed some interesting technical opportunities and challenges. I could use brushed mohair to create the appearance of tiger fur. I could use metallic yarns, or LEDs, or silkscreened circuitry, to enhance the cyborged half. I could do ALL THE THINGS!!!

So that was my afternoon with AI. It only took me about 40 minutes to go from start to finish with all this. The design isn’t done by any means; it’s way too complex and detailed to weave as it stands, and converting it to jacquard and adding metallics, screen printing, etc. would all take a ton of work.

That’s fine – I’m not really interested in going straight from AI-generated image to the loom. I feel there should be some “hand of the maker” involved, and I also don’t like using the jacquard as a low-resolution printer. It’s a very common way that people weave on jacquard looms, but I think it’s one of the least interesting. So I will likely make considerable modifications if I weave this design.

Musings

DALL-E served as an amazing way to generate and develop ideas. While it doesn’t produce anything on its own, and its original images weren’t thrilling, I managed to explore ten or twenty evolving ideas very rapidly, and wound up with something I found both interesting and worth exploring/working with further. It’s now in my “ideas” folder and I may very well weave it.

I don’t think that AI is going to replace artists. What it is going to do is reduce the skill required to make art. Instead of having to be an expert with a paint brush or with Photoshop/Illustrator, you can simply give it your idea and get one – or five, or fifty – possible directions you could go with it. You can then work with it to refine your idea further.

This version of DALL-E isn’t very good at modifying existing images, but that is coming. Adobe has already added AI features to Photoshop that allow you to circle an area, type in “Add a blue house with glowing windows,” and it will seamlessly add a blue house with glowing windows. Or replace a stop sign with trees, or whatever else you like.

My view on DALL-E and similar AIs, at least for now, is that they are likely to do for digital art what Photoshop did for traditional photography. Photoshop removed the need to have incredible technical skills at shooting, developing, and printing photos, because if you didn’t like something, you could always “Photoshop it” to fix lighting, add elements, etc. It didn’t remove the urge to create, it made it easier to create and reduced the skills required to create something you liked.

I personally really like DALL-E. That’s because I have a lot of ideas that I can’t give voice to because I don’t have the drawing skills. I mean, I can barely draw stick figures. But an afternoon playing with DALL-E enabled me to explore a lot of ideas, visually, that I could never have explored otherwise, because I simply didn’t have the skills.

Is that “cheating”? I don’t think it is. It’s simply a tool that makes it easier for me to create.

I love it.

P.S. Yes, I know there are a lot of ethical issues around copyrighted images and content being used without permission to train the models. As an artist and a writer, I do get (and share) the concerns. However, I also think those issues will be sorted out in time, and I also think that, either way, AI is here to stay.

I’ve been creating international AI by having friends in other countries contribute. It’s addictive. Since time immemorial, artists have drawn inspiration from other sources, including other artists. As with all technology, it will go on with or without our approval. Better to learn to incorporate it than to ignore it.

You could weave part of it, screen print or katazome part, and/or embroider some detail.

It’s a great design and could be very interesting and challenging to produce.

Hadn’t thought of incorporating multimedia techniques! Couching would be a really good way to add real circuitry. Will definitely have to think about that!

Could you load a photo or chart of one of your finished pieces into the AI as a base and ask to modify it?

I don’t know! I’ll experiment with uploading photos, it might be interesting.

Would you like an original digital drawing of your concept? It would remove concerns about copyright, avoid the AI artifacts like weird ears and nonsensical lines, and allow you to make detailed refinements. Pick a tiger species, set the level of detail, compare sketches, add a grid.

(It’s presumptuous, but I’d love to draw such a thing!)

The computer engineer part of me loves the idea of encoding a simple message or making symbolic choices in the depiction of circuitry. Heck, you could even include a tiny bit of functional magnetic-core memory, like the textile workers who built the Apollo guidance computers. :3

On that note: LEDs are certainly possible, just plan to spend more time than you expect researching your hardware. Fiber optics might be neat. EL wire is still sold but it is Not worth your consideration (this post gives a good breakdown: https://enlighted.com/blog/why-i-don-t-offer-el-wire-anymore)

anyway I love this and I have no chill

Thank you so much for this (and the offer!). I think I’m going to stick with DALL-E for now as it’s possible to iterate designs much faster (and with less effort) than digital drawing. While I love that you’re willing to draw designs for me (how generous is that???) I will often go through six or seven design ideas in a day, and I can’t imagine anyone else putting up with that. I really appreciate the offer, though.

I LOVE the idea of encoding a simple message in the circuitry design. Alexandra (my wife) can design circuits so I’ll ask her more about it.

Fiber optics works really well – Laurie Carlson-Steger and I collaborated on a piece with fiber optics a while back, nicking the cable where we wanted light to come out. The only problem is that you need to hook up a light engine, which means powering it, which is difficult to do in an exhibit. (Same thing for LEDs.)

I hadn’t known that about EL wire, so thanks for the heads-up. I’ll check out the blog post now.

I can’t wait to see that amazing tiger worked up!